
Jupyter AI - A generative AI extension for JupyterLab

Official: Jupyter AI — Jupyter AI documentation (translation)

Source code: jupyterlab/jupyter-ai: A generative AI extension for JupyterLab (translation)

Installation

pipx

Official: Installation - Users — Jupyter AI documentation (translation)

$ pipx inject jupyterlab jupyter-ai

Or, to install the Python modules from prebuilt binaries only, without building anything from source 🤔

$ pipx inject jupyterlab jupyter-ai --pip-args='--only-binary=:all:'

injected package jupyter-ai into venv jupyterlab done! ✨ 🌟 ✨

Install the following Python modules according to the model providers you plan to use 🤔

$ pipx inject jupyterlab ai21 anthropic boto3 cohere qianfan langchain-google-genai gpt4all huggingface_hub ipywidgets pillow langchain_nvidia_ai_endpoints openai

injected package ai21 into venv jupyterlab done! ✨ 🌟 ✨

injected package anthropic into venv jupyterlab done! ✨ 🌟 ✨

injected package boto3 into venv jupyterlab done! ✨ 🌟 ✨

injected package cohere into venv jupyterlab done! ✨ 🌟 ✨

injected package qianfan into venv jupyterlab done! ✨ 🌟 ✨

injected package langchain-google-genai into venv jupyterlab done! ✨ 🌟 ✨

injected package gpt4all into venv jupyterlab done! ✨ 🌟 ✨

injected package huggingface-hub into venv jupyterlab done! ✨ 🌟 ✨

injected package ipywidgets into venv jupyterlab done! ✨ 🌟 ✨

injected package pillow into venv jupyterlab done! ✨ 🌟 ✨

injected package langchain-nvidia-ai-endpoints into venv jupyterlab done! ✨ 🌟 ✨

injected package openai into venv jupyterlab done! ✨ 🌟 ✨
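To confirm that the injected packages are actually importable from the JupyterLab venv, a small check along these lines may help. It is only a sketch: `PROVIDER_MODULES` and `missing_modules` are illustrative names, and the list holds import names rather than pip names (pillow is imported as `PIL`, and hyphens become underscores). It has to run with the venv's own Python, for example from a notebook cell in that JupyterLab.

```python
from importlib.util import find_spec

# Import names (not pip names) for the packages injected above.
# pillow is imported as "PIL"; hyphens in pip names become underscores.
PROVIDER_MODULES = [
    "ai21", "anthropic", "boto3", "cohere", "qianfan",
    "langchain_google_genai", "gpt4all", "huggingface_hub",
    "ipywidgets", "PIL", "langchain_nvidia_ai_endpoints", "openai",
]


def missing_modules(names):
    """Return the top-level module names that cannot be found on the import path."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(PROVIDER_MODULES)
    print("missing:", ", ".join(missing) if missing else "none")
```

`find_spec` only locates the module without importing it, so the check stays fast even for heavy packages.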

Model providers

| Provider | Provider ID | Environment variable(s) | Python package(s) |
|---|---|---|---|
| AI21 (AI21 Labs) | ai21 | AI21_API_KEY | ai21 |
| Anthropic | anthropic | ANTHROPIC_API_KEY | anthropic |
| Anthropic (chat) | anthropic-chat | ANTHROPIC_API_KEY | anthropic |
| Bedrock (Amazon Bedrock) | bedrock | N/A | boto3 |
| Bedrock (chat) | bedrock-chat | N/A | boto3 |
| Cohere | cohere | COHERE_API_KEY | cohere |
| ERNIE-Bot (chatbot developed by Baidu) | qianfan | QIANFAN_AK, QIANFAN_SK | qianfan |
| Gemini | gemini | GOOGLE_API_KEY (Get API key, Google AI Studio) | langchain-google-genai |
| GPT4All | gpt4all | N/A | gpt4all |
| Hugging Face Hub | huggingface_hub | HUGGINGFACEHUB_API_TOKEN | huggingface_hub, ipywidgets, pillow |
| NVIDIA (NVIDIA AI) | nvidia-chat | NVIDIA_API_KEY | langchain_nvidia_ai_endpoints |
| OpenAI | openai | OPENAI_API_KEY (API keys - OpenAI API) | openai |
| OpenAI (chat) | openai-chat | OPENAI_API_KEY | openai |
| SageMaker (Amazon SageMaker) | sagemaker-endpoint | N/A | boto3 |
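Before starting JupyterLab it can be handy to check whether the environment variables listed in the table are set for the provider you intend to use. The following is a minimal sketch, not part of Jupyter AI itself: `REQUIRED_ENV` simply restates the table, and `missing_env_vars` is a hypothetical helper name.

```python
import os

# Provider ID -> environment variables, restated from the table above.
# An empty list corresponds to "N/A" in the table.
REQUIRED_ENV = {
    "ai21": ["AI21_API_KEY"],
    "anthropic": ["ANTHROPIC_API_KEY"],
    "anthropic-chat": ["ANTHROPIC_API_KEY"],
    "bedrock": [],
    "bedrock-chat": [],
    "cohere": ["COHERE_API_KEY"],
    "qianfan": ["QIANFAN_AK", "QIANFAN_SK"],
    "gemini": ["GOOGLE_API_KEY"],
    "gpt4all": [],
    "huggingface_hub": ["HUGGINGFACEHUB_API_TOKEN"],
    "nvidia-chat": ["NVIDIA_API_KEY"],
    "openai": ["OPENAI_API_KEY"],
    "openai-chat": ["OPENAI_API_KEY"],
    "sagemaker-endpoint": [],
}


def missing_env_vars(provider_id, environ=None):
    """Return the variables for provider_id that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_ENV[provider_id] if not environ.get(name)]


if __name__ == "__main__":
    for provider_id in REQUIRED_ENV:
        missing = missing_env_vars(provider_id)
        if missing:
            print(f"{provider_id}: missing {', '.join(missing)}")
```

Passing a plain dict as `environ` makes the helper easy to try without touching the real environment.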

Jupyter AI is likewise implemented under a vendor-neutral policy

Project Jupyter is vendor-neutral, so Jupyter AI supports LLMs from AI21, Anthropic, AWS, Cohere, HuggingFace Hub, and OpenAI. More model providers will be added in the future. Please review a provider’s privacy policy and pricing model before you use it.


From: Generative AI in Jupyter. Jupyter AI, a new open source project… | by Jason Weill | Jupyter Blog

GPT4All

$ mkdir -p ~/.cache/gpt4all

| Best overall fast chat model | Fast responses; chat-based model; trained by Mistral AI; fine-tuned on the OpenOrca dataset curated with Nomic Atlas; licensed for commercial use |

$ curl -LO --output-dir ~/.cache/gpt4all https://gpt4all.io/models/gguf/mistral-7b-openorca.Q4_0.gguf

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3918M 100 3918M 0 0 10.8M 0 0:06:02 0:06:02 --:--:-- 11.1M

| Best overall fast instruction-following model | Fast responses; trained by Mistral AI; uncensored; licensed for commercial use |

$ curl -LO --output-dir ~/.cache/gpt4all https://gpt4all.io/models/gguf/mistral-7b-instruct-v0.1.Q4_0.gguf

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3918M 100 3918M 0 0 10.5M 0 0:06:12 0:06:12 --:--:-- 10.6M

| Very fast model with good quality | Fastest responses; instruction-based; trained by TII; fine-tuned by Nomic AI; licensed for commercial use |

$ curl -LO --output-dir ~/.cache/gpt4all https://gpt4all.io/models/gguf/gpt4all-falcon-q4_0.gguf

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4015M 100 4015M 0 0 10.2M 0 0:06:31 0:06:31 --:--:-- 10.1M

| Very good overall model | Instruction-based; based on the same dataset as Groovy; slower than Groovy but with higher-quality responses; trained by Nomic AI; cannot be used commercially |

$ curl -LO --output-dir ~/.cache/gpt4all http://gpt4all.io/models/ggml-gpt4all-l13b-snoozy.bin

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 7759M 100 7759M 0 0 5859k 0 0:22:36 0:22:36 --:--:-- 4235k

$ curl -LO --output-dir ~/.cache/gpt4all https://gpt4all.io/models/ggml-gpt4all-j-v1.2-jazzy.bin

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3609M 100 3609M 0 0 10.6M 0 0:05:37 0:05:37 --:--:-- 11.0M

$ curl -LO --output-dir ~/.cache/gpt4all https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3609M 100 3609M 0 0 10.6M 0 0:05:40 0:05:40 --:--:-- 10.9M

| LocalDocs text embeddings model | Used for retrieval-augmented generation (RAG) when working with the LocalDocs feature. |

$ curl -LO --output-dir ~/.cache/gpt4all https://gpt4all.io/models/gguf/all-MiniLM-L6-v2-f16.gguf

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.7M 100 43.7M 0 0 8356k 0 0:00:05 0:00:05 --:--:-- 9922k
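After the downloads finish, it is worth confirming that every file really landed in `~/.cache/gpt4all`. The sketch below only checks for the file names used in the curl commands above; `MODEL_FILES` and `missing_models` are illustrative names, and nothing here verifies file integrity.

```python
from pathlib import Path

# Directory and file names taken from the curl commands above.
MODEL_DIR = Path.home() / ".cache" / "gpt4all"
MODEL_FILES = [
    "mistral-7b-openorca.Q4_0.gguf",
    "mistral-7b-instruct-v0.1.Q4_0.gguf",
    "gpt4all-falcon-q4_0.gguf",
    "ggml-gpt4all-l13b-snoozy.bin",
    "ggml-gpt4all-j-v1.2-jazzy.bin",
    "ggml-gpt4all-j-v1.3-groovy.bin",
    "all-MiniLM-L6-v2-f16.gguf",
]


def missing_models(model_dir, filenames):
    """Return the file names that do not exist as regular files in model_dir."""
    return [name for name in filenames if not (Path(model_dir) / name).is_file()]


if __name__ == "__main__":
    missing = missing_models(MODEL_DIR, MODEL_FILES)
    print("missing:", ", ".join(missing) if missing else "none")
```

An interrupted download still leaves a file behind, so comparing sizes against the curl totals shown above is a reasonable extra manual check.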

See also

Development install

Official: Development install - Contributors — Jupyter AI documentation (translation)

Install Node.js with anyenv 🤔

Install JupyterLab 🤔

Reference: jupyter-ai/scripts/install.sh at main · jupyterlab/jupyter-ai

$ git clone https://github.com/jupyterlab/jupyter-ai.git

Cloning into 'jupyter-ai'...

remote: Enumerating objects: 4239, done.

remote: Counting objects: 100% (957/957), done.

remote: Compressing objects: 100% (350/350), done.

remote: Total 4239 (delta 723), reused 732 (delta 591), pack-reused 3282

Receiving objects: 100% (4239/4239), 8.08 MiB | 1.56 MiB/s, done.

Resolving deltas: 100% (2766/2766), done.

$ micromamba create -n jupyter-ai_py311 -c conda-forge python=3.11 nodejs=20

nodefaults/linux-64 (check zst) Checked 1.1s

nodefaults/noarch (check zst) Checked 1.1s

nodefaults/linux-64 125.0 B @ 198.0 B/s 0.6s

nodefaults/noarch 116.0 B @ 118.0 B/s 1.0s

conda-forge/noarch 13.9MB @ 1.4MB/s 10.1s

conda-forge/linux-64 33.1MB @ 2.7MB/s 12.2s

Transaction

Prefix: /home/tomoyan/micromamba/envs/jupyter-ai_py311

Updating specs:

- python=3.11

- nodejs=20

Package Version Build Channel Size

───────────────────────────────────────────────────────────────────────────

Install:

───────────────────────────────────────────────────────────────────────────

+ _libgcc_mutex 0.1 conda_forge conda-forge 3kB

+ ld_impl_linux-64 2.40 h41732ed_0 conda-forge 705kB

+ ca-certificates 2024.2.2 hbcca054_0 conda-forge 155kB

+ libstdcxx-ng 13.2.0 h7e041cc_5 conda-forge 4MB

+ libgomp 13.2.0 h807b86a_5 conda-forge 420kB

+ _openmp_mutex 4.5 2_gnu conda-forge 24kB

+ libgcc-ng 13.2.0 h807b86a_5 conda-forge 771kB

+ libxcrypt 4.4.36 hd590300_1 conda-forge 100kB

+ libexpat 2.6.2 h59595ed_0 conda-forge 74kB

+ libzlib 1.2.13 hd590300_5 conda-forge 62kB

+ libffi 3.4.2 h7f98852_5 conda-forge 58kB

+ bzip2 1.0.8 hd590300_5 conda-forge 254kB

+ ncurses 6.4 h59595ed_2 conda-forge 884kB

+ libuv 1.46.0 hd590300_0 conda-forge 893kB

+ icu 73.2 h59595ed_0 conda-forge 12MB

+ openssl 3.2.1 hd590300_0 conda-forge 3MB

+ libuuid 2.38.1 h0b41bf4_0 conda-forge 34kB

+ libnsl 2.0.1 hd590300_0 conda-forge 33kB

+ xz 5.2.6 h166bdaf_0 conda-forge 418kB

+ tk 8.6.13 noxft_h4845f30_101 conda-forge 3MB

+ libsqlite 3.45.2 h2797004_0 conda-forge 857kB

+ zlib 1.2.13 hd590300_5 conda-forge 93kB

+ readline 8.2 h8228510_1 conda-forge 281kB

+ nodejs 20.9.0 hb753e55_0 conda-forge 17MB

+ tzdata 2024a h0c530f3_0 conda-forge 120kB

+ python 3.11.8 hab00c5b_0_cpython conda-forge 31MB

+ wheel 0.42.0 pyhd8ed1ab_0 conda-forge 58kB

+ setuptools 69.2.0 pyhd8ed1ab_0 conda-forge 471kB

+ pip 24.0 pyhd8ed1ab_0 conda-forge 1MB

Summary:

Install: 29 packages

Total download: 78MB

───────────────────────────────────────────────────────────────────────────

Confirm changes: [Y/n]

Transaction starting

_libgcc_mutex 2.6kB @ 6.7kB/s 0.4s

libffi 58.3kB @ 99.5kB/s 0.2s

ca-certificates 155.4kB @ 238.1kB/s 0.7s

libuuid 33.6kB @ 41.2kB/s 0.2s

ld_impl_linux-64 704.7kB @ 683.4kB/s 1.0s

libgomp 419.8kB @ 393.0kB/s 1.1s

readline 281.5kB @ 190.0kB/s 0.5s

libuv 893.3kB @ 466.4kB/s 1.3s

libxcrypt 100.4kB @ 42.9kB/s 0.4s

bzip2 254.2kB @ 91.6kB/s 0.4s

pip 1.4MB @ 357.1kB/s 2.4s

libstdcxx-ng 3.8MB @ 920.9kB/s 4.2s

tk 3.3MB @ 757.6kB/s 3.6s

tzdata 119.8kB @ 26.5kB/s 0.4s

_openmp_mutex 23.6kB @ 4.9kB/s 0.4s

libzlib 61.6kB @ 12.4kB/s 0.5s

libsqlite 857.5kB @ 170.3kB/s 1.1s

libnsl 33.4kB @ 6.4kB/s 0.5s

openssl 2.9MB @ 349.2kB/s 5.4s

libgcc-ng 770.5kB @ 90.7kB/s 3.5s

ncurses 884.4kB @ 89.5kB/s 1.4s

wheel 57.6kB @ 5.7kB/s 1.9s

setuptools 471.2kB @ 44.9kB/s 0.6s

zlib 92.8kB @ 8.4kB/s 1.0s

libexpat 73.7kB @ 6.5kB/s 0.9s

xz 418.4kB @ 33.8kB/s 1.3s

icu 12.1MB @ 794.2kB/s 10.0s

python 30.8MB @ 1.7MB/s 17.3s

nodejs 17.1MB @ 869.6kB/s 14.7s

Linking _libgcc_mutex-0.1-conda_forge

Linking ld_impl_linux-64-2.40-h41732ed_0

Linking ca-certificates-2024.2.2-hbcca054_0

Linking libstdcxx-ng-13.2.0-h7e041cc_5

Linking libgomp-13.2.0-h807b86a_5

Linking _openmp_mutex-4.5-2_gnu

Linking libgcc-ng-13.2.0-h807b86a_5

Linking libxcrypt-4.4.36-hd590300_1

Linking libexpat-2.6.2-h59595ed_0

Linking libzlib-1.2.13-hd590300_5

Linking libffi-3.4.2-h7f98852_5

Linking bzip2-1.0.8-hd590300_5

Linking ncurses-6.4-h59595ed_2

Linking libuv-1.46.0-hd590300_0

Linking icu-73.2-h59595ed_0

Linking openssl-3.2.1-hd590300_0

Linking libuuid-2.38.1-h0b41bf4_0

Linking libnsl-2.0.1-hd590300_0

Linking xz-5.2.6-h166bdaf_0

Linking tk-8.6.13-noxft_h4845f30_101

Linking libsqlite-3.45.2-h2797004_0

Linking zlib-1.2.13-hd590300_5

Linking readline-8.2-h8228510_1

Linking nodejs-20.9.0-hb753e55_0

Linking tzdata-2024a-h0c530f3_0

Linking python-3.11.8-hab00c5b_0_cpython

Linking wheel-0.42.0-pyhd8ed1ab_0

Linking setuptools-69.2.0-pyhd8ed1ab_0

Linking pip-24.0-pyhd8ed1ab_0

Transaction finished

To activate this environment, use:

micromamba activate jupyter-ai_py311

Or to execute a single command in this environment, use:

micromamba run -n jupyter-ai_py311 mycommand

$ micromamba activate jupyter-ai_py311

(jupyter-ai_py311) $ ./scripts/install.sh

トラブルシューティング

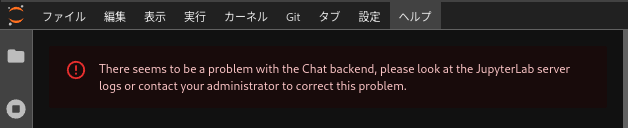

もしも「チャットバックエンドの問題」エラーで再設定できなくなったら...😢

公式: problem with the Chat backend · Issue #335 · jupyterlab/jupyter-ai 翻訳

There seems to be a problem with the Chat backend, please look at the JupyterLab server logs or contact your administrator to correct this problem.


$ jupyter-lab --ip=0.0.0.0 --port=8888 --no-browser

[I 2024-03-12 06:29:13.731 ServerApp] ipyparallel | extension was successfully linked.

[I 2024-03-12 06:29:13.739 ServerApp] jupyter_ai | extension was successfully linked.

[I 2024-03-12 06:29:13.739 ServerApp] jupyter_lsp | extension was successfully linked.

[I 2024-03-12 06:29:13.747 ServerApp] jupyter_server_fileid | extension was successfully linked.

[I 2024-03-12 06:29:13.754 ServerApp] jupyter_server_mathjax | extension was successfully linked.

[I 2024-03-12 06:29:13.762 ServerApp] jupyter_server_terminals | extension was successfully linked.

[I 2024-03-12 06:29:13.770 ServerApp] jupyter_server_ydoc | extension was successfully linked.

[I 2024-03-12 06:29:13.779 ServerApp] jupyterlab | extension was successfully linked.

[I 2024-03-12 06:29:13.779 ServerApp] jupyterlab_git | extension was successfully linked.

[I 2024-03-12 06:29:13.779 ServerApp] nbdime | extension was successfully linked.

[I 2024-03-12 06:29:13.786 ServerApp] notebook_shim | extension was successfully linked.

[I 2024-03-12 06:29:13.825 ServerApp] notebook_shim | extension was successfully loaded.

[I 2024-03-12 06:29:13.827 ServerApp] Loading IPython parallel extension

[I 2024-03-12 06:29:13.828 ServerApp] ipyparallel | extension was successfully loaded.

[I 2024-03-12 06:29:13.829 AiExtension] Configured provider allowlist: None

[I 2024-03-12 06:29:13.829 AiExtension] Configured provider blocklist: None

[I 2024-03-12 06:29:13.829 AiExtension] Configured model allowlist: None

[I 2024-03-12 06:29:13.829 AiExtension] Configured model blocklist: None

[I 2024-03-12 06:29:13.829 AiExtension] Configured model parameters: {}

[I 2024-03-12 06:29:13.883 AiExtension] Registered model provider `ai21`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `bedrock`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `bedrock-chat`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `anthropic`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `anthropic-chat`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `azure-chat-openai`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `cohere`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `gpt4all`.

[I 2024-03-12 06:29:13.884 AiExtension] Registered model provider `huggingface_hub`.

[I 2024-03-12 06:29:13.941 AiExtension] Registered model provider `nvidia-chat`.

[I 2024-03-12 06:29:13.941 AiExtension] Registered model provider `openai`.

[I 2024-03-12 06:29:13.941 AiExtension] Registered model provider `openai-chat`.

[I 2024-03-12 06:29:13.941 AiExtension] Registered model provider `qianfan`.

[I 2024-03-12 06:29:13.942 AiExtension] Registered model provider `sagemaker-endpoint`.

[I 2024-03-12 06:29:14.000 AiExtension] Registered embeddings model provider `bedrock`.

[I 2024-03-12 06:29:14.000 AiExtension] Registered embeddings model provider `cohere`.

[I 2024-03-12 06:29:14.000 AiExtension] Registered embeddings model provider `gpt4all`.

[I 2024-03-12 06:29:14.000 AiExtension] Registered embeddings model provider `huggingface_hub`.

[I 2024-03-12 06:29:14.000 AiExtension] Registered embeddings model provider `openai`.

[I 2024-03-12 06:29:14.000 AiExtension] Registered embeddings model provider `qianfan`.

[I 2024-03-12 06:29:14.012 AiExtension] Registered providers.

[I 2024-03-12 06:29:14.012 AiExtension] Registered jupyter_ai server extension

[W 2024-03-12 06:29:14.086 ServerApp] jupyter_ai | extension failed loading with message: ValueError('Failed to retrieve model')

Traceback (most recent call last):

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/manager.py", line 359, in load_extension

extension.load_all_points(self.serverapp)

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/manager.py", line 231, in load_all_points

return [self.load_point(point_name, serverapp) for point_name in self.extension_points]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/manager.py", line 222, in load_point

return point.load(serverapp)

^^^^^^^^^^^^^^^^^^^^^

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/manager.py", line 150, in load

return loader(serverapp)

^^^^^^^^^^^^^^^^^

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/application.py", line 474, in _load_jupyter_server_extension

extension.initialize()

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/application.py", line 435, in initialize

self._prepare_settings()

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_server/extension/application.py", line 315, in _prepare_settings

self.initialize_settings()

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_ai/extension.py", line 238, in initialize_settings

learn_chat_handler = LearnChatHandler(**chat_handler_kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_ai/chat_handlers/learn.py", line 66, in __init__

self._load()

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_ai/chat_handlers/learn.py", line 70, in _load

embeddings = self.get_embedding_model()

^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_ai/chat_handlers/learn.py", line 295, in get_embedding_model

return em_provider_cls(**em_provider_args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyter_ai_magics/embedding_providers.py", line 133, in __init__

GPT4All.retrieve_model(model_name=model_name, allow_download=False)

File "/home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/gpt4all/gpt4all.py", line 193, in retrieve_model

raise ValueError("Failed to retrieve model")

ValueError: Failed to retrieve model

[I 2024-03-12 06:29:14.093 ServerApp] jupyter_lsp | extension was successfully loaded.

[I 2024-03-12 06:29:14.093 FileIdExtension] Configured File ID manager: ArbitraryFileIdManager

[I 2024-03-12 06:29:14.093 FileIdExtension] ArbitraryFileIdManager : Configured root dir: /home/tomoyan

[I 2024-03-12 06:29:14.093 FileIdExtension] ArbitraryFileIdManager : Configured database path: /home/tomoyan/.local/share/jupyter/file_id_manager.db

[I 2024-03-12 06:29:14.094 FileIdExtension] ArbitraryFileIdManager : Successfully connected to database file.

[I 2024-03-12 06:29:14.094 FileIdExtension] ArbitraryFileIdManager : Creating File ID tables and indices with journal_mode = DELETE

[I 2024-03-12 06:29:14.094 FileIdExtension] Attached event listeners.

[I 2024-03-12 06:29:14.095 ServerApp] jupyter_server_fileid | extension was successfully loaded.

[I 2024-03-12 06:29:14.096 ServerApp] jupyter_server_mathjax | extension was successfully loaded.

[I 2024-03-12 06:29:14.097 ServerApp] jupyter_server_terminals | extension was successfully loaded.

[I 2024-03-12 06:29:14.098 ServerApp] jupyter_server_ydoc | extension was successfully loaded.

[I 2024-03-12 06:29:14.103 LabApp] JupyterLab extension loaded from /home/tomoyan/.local/share/pipx/venvs/jupyterlab/lib64/python3.12/site-packages/jupyterlab

[I 2024-03-12 06:29:14.103 LabApp] JupyterLab application directory is /home/tomoyan/.local/share/pipx/venvs/jupyterlab/share/jupyter/lab

[I 2024-03-12 06:29:14.104 LabApp] Extension Manager is 'pypi'.

[I 2024-03-12 06:29:14.142 ServerApp] jupyterlab | extension was successfully loaded.

[I 2024-03-12 06:29:14.153 ServerApp] jupyterlab_git | extension was successfully loaded.

[I 2024-03-12 06:29:14.245 ServerApp] nbdime | extension was successfully loaded.

[I 2024-03-12 06:29:14.246 ServerApp] Serving notebooks from local directory: /home/tomoyan

[I 2024-03-12 06:29:14.246 ServerApp] Jupyter Server 2.13.0 is running at:

[I 2024-03-12 06:29:14.246 ServerApp] http://highway-x.fireball.local:8888/lab

[I 2024-03-12 06:29:14.246 ServerApp] http://127.0.0.1:8888/lab

[I 2024-03-12 06:29:14.246 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[I 2024-03-12 06:29:14.963 ServerApp] Skipped non-installed server(s): bash-language-server, dockerfile-language-server-nodejs, javascript-typescript-langserver, jedi-language-server, julia-language-server, pyright, python-language-server, python-lsp-server, r-languageserver, sql-language-server, texlab, typescript-language-server, unified-language-server, vscode-css-languageserver-bin, vscode-html-languageserver-bin, vscode-json-languageserver-bin, yaml-language-server

[W 2024-03-12 06:29:17.122 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 86.18ms referer=None

[W 2024-03-12 06:29:21.092 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.26ms referer=None

[W 2024-03-12 06:29:25.946 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.51ms referer=None

[W 2024-03-12 06:29:28.094 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.02ms referer=None

[W 2024-03-12 06:29:30.515 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.18ms referer=None

[W 2024-03-12 06:29:34.732 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.11ms referer=None

[W 2024-03-12 06:29:40.609 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 3.83ms referer=None

[W 2024-03-12 06:29:45.902 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.25ms referer=None

[W 2024-03-12 06:29:51.701 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 2.66ms referer=None

[W 2024-03-12 06:29:54.176 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.09ms referer=None

[W 2024-03-12 06:29:58.953 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 1.75ms referer=None

[W 2024-03-12 06:30:02.606 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 5.21ms referer=None

[W 2024-03-12 06:30:08.093 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.43ms referer=None

[W 2024-03-12 06:30:12.383 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 3.33ms referer=None

[W 2024-03-12 06:30:16.697 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 5.08ms referer=None

[W 2024-03-12 06:30:18.842 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.15ms referer=None

[W 2024-03-12 06:30:22.020 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.38ms referer=None

[W 2024-03-12 06:30:26.797 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.24ms referer=None

[W 2024-03-12 06:30:30.129 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 2.27ms referer=None

[W 2024-03-12 06:30:33.787 ServerApp] 404 GET /api/ai/chats (123d23ghj72149e89021f2dd23d6fc32@127.0.0.1) 4.49ms referer=None

^C[I 2024-03-12 06:30:34.769 ServerApp] interrupted

[I 2024-03-12 06:30:34.769 ServerApp] Serving notebooks from local directory: /home/tomoyan

0 active kernels

Jupyter Server 2.13.0 is running at:

http://highway-x.fireball.local:8888/lab

http://127.0.0.1:8888/lab

Shut down this Jupyter server (y/[n])? ^C[C 2024-03-12 06:30:35.048 ServerApp] received signal 2, stopping

[I 2024-03-12 06:30:35.049 ServerApp] Shutting down 11 extensions

[I 2024-03-12 06:30:35.050 AiExtension] Closing Dask client.

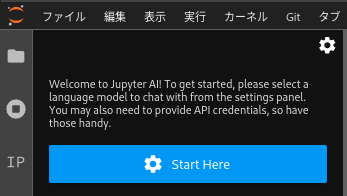

Delete the Jupyter AI configuration and set it up again 😊

$ ll ~/.local/share/jupyter/jupyter_ai

total 8

-rw-r--r-- 1 tomoyan tomoyan 194 Mar 12 06:29 config.json

-rw-r--r-- 1 tomoyan tomoyan 1216 Mar 9 09:19 config_schema.json

drwxr-xr-x 1 tomoyan tomoyan 0 Mar 9 09:19 indices

$ rm ~/.local/share/jupyter/jupyter_ai/config.json

$ jupyter-lab --ip=0.0.0.0 --port=8888 --no-browser

Could not load vector index from disk.

[E 2024-03-12 11:05:44.478 AiExtension] Could not load vector index from disk.
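Removing `config.json` discards the previous settings for good. A slightly more cautious variant of the same reset is to move the file aside under a timestamped name, so it can be inspected or restored later. This is only a sketch: `back_up_config` is a hypothetical helper, the path is the one shown in the listing above, and JupyterLab should presumably be stopped before running it.

```python
import time
from pathlib import Path

# Path of the Jupyter AI config file, as shown in the directory listing above.
CONFIG_PATH = Path.home() / ".local" / "share" / "jupyter" / "jupyter_ai" / "config.json"


def back_up_config(config_path):
    """Rename config_path to '<name>.<timestamp>.bak' and return the new path.

    Returns None when there is no config file to move.
    """
    config_path = Path(config_path)
    if not config_path.is_file():
        return None
    stamp = time.strftime("%Y%m%d-%H%M%S")
    backup = config_path.with_name(f"{config_path.name}.{stamp}.bak")
    config_path.rename(backup)
    return backup


if __name__ == "__main__":
    result = back_up_config(CONFIG_PATH)
    print(f"moved to {result}" if result else "no config.json found")
```

With the file moved away, the next start of JupyterLab finds no `config.json`, which is the same situation the `rm` command above creates.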

References

Generative AI in Jupyter. Jupyter AI, a new open source project… | by Jason Weill | Jupyter Blog (translation)

AI | Forbes JAPAN official site (Forbes Japan)